Logic


In philosophy, Logic (from the Greek λογική logikē)[1] is the formal systematic study of the principles of valid inference and correct reasoning. Logic is used in most intellectual activities, but is studied primarily in the disciplines of philosophy, mathematics, semantics, and computer science. It examines general forms which arguments may take, which forms are valid, and which are fallacies. In philosophy, the study of logic is applied in most major areas: ontology, epistemology, ethics, metaphysics. In mathematics, it is the study of valid inferences within some formal language.[2] Logic is also studied in argumentation theory.[3]

Logic was studied in several ancient civilizations, including the Indian subcontinent,[4] China[5] and Greece. Logic was established as a discipline by Aristotle, who gave it a fundamental place in philosophy. The study of logic was part of the classical trivium, which also included grammar and rhetoric.

Logic is often divided into two parts, inductive reasoning and deductive reasoning.


The study of logic

"Upon this first, and in one sense this sole, rule of reason, that in order to learn you must desire to learn, and in so desiring not be satisfied with what you already incline to think, there follows one corollary which itself deserves to be inscribed upon every wall of the city of philosophy: Do not block the way of inquiry."

Charles Sanders Peirce, "First Rule of Logic"

The concept of logical form is central to logic, it being held that the validity of an argument is determined by its logical form, not by its content. Traditional Aristotelian syllogistic logic and modern symbolic logic are examples of formal logics.

- Informal logic is the study of natural language arguments. The study of fallacies is an especially important branch of informal logic. The dialogues of Plato[6] are good examples of informal logic.

- Formal logic is the study of inference with purely formal content. An inference possesses a purely formal content if it can be expressed as a particular application of a wholly abstract rule, that is, a rule that is not about any particular thing or property. The works of Aristotle contain the earliest known formal study of logic. Modern formal logic follows and expands on Aristotle.[7] In many definitions of logic, logical inference and inference with purely formal content are the same. This does not render the notion of informal logic vacuous, because no formal logic captures all of the nuance of natural language.

- Symbolic logic is the study of symbolic abstractions that capture the formal features of logical inference.[8][9] Symbolic logic is often divided into two branches: propositional logic and predicate logic.

- Mathematical logic is an extension of symbolic logic into other areas, in particular to the study of model theory, proof theory, set theory, and recursion theory.

Logical form

Logic is generally accepted to be formal, in that it aims to analyze and represent the form (or logical form) of any valid argument type. The form of an argument is displayed by representing its sentences in the formal grammar and symbolism of a logical language to make its content usable in formal inference. If one considers the notion of form to be too philosophically loaded, one could say that formalizing is nothing else than translating English sentences into the language of logic.

This is known as showing the logical form of the argument. It is necessary because indicative sentences of ordinary language show a considerable variety of form and complexity that makes their use in inference impractical. It requires, first, ignoring those grammatical features which are irrelevant to logic (such as gender and declension if the argument is in Latin), replacing conjunctions which are not relevant to logic (such as 'but') with logical conjunctions like 'and' and replacing ambiguous or alternative logical expressions ('any', 'every', etc.) with expressions of a standard type (such as 'all', or the universal quantifier ∀).

Second, certain parts of the sentence must be replaced with schematic letters. Thus, for example, the expression 'all As are Bs' shows the logical form which is common to the sentences 'all men are mortals', 'all cats are carnivores', 'all Greeks are philosophers' and so on.

That the concept of form is fundamental to logic was already recognized in ancient times. Aristotle uses variable letters to represent valid inferences in Prior Analytics, leading Jan Łukasiewicz to say that the introduction of variables was 'one of Aristotle's greatest inventions'.[10] According to the followers of Aristotle (such as Ammonius), only the logical principles stated in schematic terms belong to logic, and not those given in concrete terms. The concrete terms 'man', 'mortal', etc., are analogous to the substitution values of the schematic placeholders 'A', 'B', 'C', which were called the 'matter' (Greek 'hyle') of the inference.

The fundamental difference between modern formal logic and traditional or Aristotelian logic lies in their differing analysis of the logical form of the sentences they treat.

- In the traditional view, the form of the sentence consists of (1) a subject (e.g. 'man') plus a sign of quantity ('all' or 'some' or 'no'); (2) the copula which is of the form 'is' or 'is not'; (3) a predicate (e.g. 'mortal'). Thus: all men are mortal. The logical constants such as 'all', 'no' and so on, plus sentential connectives such as 'and' and 'or' were called 'syncategorematic' terms (from the Greek 'kategorei' – to predicate, and 'syn' – together with). This is a fixed scheme, where each judgement has an identified quantity and copula, determining the logical form of the sentence.

- According to the modern view, the fundamental form of a simple sentence is given by a recursive schema, involving logical connectives, such as a quantifier with its bound variable, which are joined by juxtaposition to other sentences, which in turn may have logical structure.

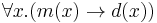

- The modern view is more complex, since a single judgement of Aristotle's system will involve two or more logical connectives. For example, the sentence "All men are mortal" involves in term logic two non-logical terms "is a man" (here M) and "is mortal" (here D): the sentence is given by the judgement A(M,D). In predicate logic the sentence involves the same two non-logical concepts, here analyzed as $m(x)$ and $d(x)$, and the sentence is given by $\forall x\,(m(x) \rightarrow d(x))$, involving the logical connectives for universal quantification and implication.

- But equally, the modern view is more powerful: medieval logicians recognized the problem of multiple generality, where Aristotelean logic is unable to satisfactorily render such sentences as "Some guys have all the luck", because both quantities "all" and "some" may be relevant in an inference, but the fixed scheme that Aristotle used allows only one to govern the inference. Just as linguists recognize recursive structure in natural languages, it appears that logic needs recursive structure.
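The multiple generality example can be given one plausible rendering in predicate notation. The predicate letters $G$ ("is a guy"), $L$ ("is a piece of luck") and $H$ ("has") are assumptions introduced here for illustration only, and other readings of the sentence are possible.

```latex
\exists x \, \bigl( G(x) \land \forall y \, ( L(y) \rightarrow H(x, y) ) \bigr)
```

In this rendering the universal quantifier sits inside the scope of the existential one, a nesting for which the fixed scheme of subject, copula and predicate has no place.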

Deductive and inductive reasoning, and retroductive inference

Deductive reasoning concerns what follows necessarily from given premises (if a, then b). However, inductive reasoning—the process of deriving a reliable generalization from observations—has sometimes been included in the study of logic. Correspondingly, we must distinguish between deductive validity and inductive validity (called "cogency"). An inference is deductively valid if and only if there is no possible situation in which all the premises are true but the conclusion false. An inductive argument can be neither valid nor invalid; its premises give only some degree of probability, but not certainty, to its conclusion.

The notion of deductive validity can be rigorously stated for systems of formal logic in terms of the well-understood notions of semantics. Inductive validity on the other hand requires us to define a reliable generalization of some set of observations. The task of providing this definition may be approached in various ways, some less formal than others; some of these definitions may use mathematical models of probability. For the most part this discussion of logic deals only with deductive logic.
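For propositional arguments, the definition of deductive validity can be checked mechanically by enumerating every truth assignment and looking for one that makes all premises true and the conclusion false. The following is a minimal sketch under that restriction; the function name `is_valid` and the encoding of sentences as Python functions are assumptions made for illustration.

```python
from itertools import product

def is_valid(premises, conclusion, variables):
    """Return True if no truth assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=len(variables)):
        env = dict(zip(variables, values))
        if all(p(env) for p in premises) and not conclusion(env):
            return False  # a counterexample situation exists
    return True

def implies(x, y):
    """Material conditional 'if x then y'."""
    return (not x) or y

# Modus ponens: from "if a then b" and "a", infer "b".
modus_ponens = is_valid(
    premises=[lambda e: implies(e["a"], e["b"]), lambda e: e["a"]],
    conclusion=lambda e: e["b"],
    variables=["a", "b"],
)

# Affirming the consequent: from "if a then b" and "b", infer "a".
affirming_consequent = is_valid(
    premises=[lambda e: implies(e["a"], e["b"]), lambda e: e["b"]],
    conclusion=lambda e: e["a"],
    variables=["a", "b"],
)

print(modus_ponens, affirming_consequent)  # True False
```

The second form fails because the assignment in which a is false and b is true satisfies both premises but not the conclusion.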

Retroductive inference is a mode of reasoning that Peirce proposed as operating over and above induction and deduction to “open up new ground” in processes of theorizing (1911, p. 2). He defines retroduction as a logical inference that allows us to "render comprehensible" some observations/events which we perceive, by relating these back to a posited state of affairs that would help to shed light on the observations (Peirce, 1911, p. 2). He remarks that the “characteristic formula” of reasoning that he calls retroduction is that it involves reasoning from a consequent (any observed/experienced phenomena that confront us) to an antecedent (that is, a posited state of things that helps us to render comprehensible the observed phenomena). Or, as he otherwise puts it, it can be considered as “regressing from a consequent to a hypothetical antecedent” (1911, p. 4). See for instance, the discussion at: http://www.helsinki.fi/science/commens/dictionary.html

Some authors have suggested that this mode of inference can be used within social theorizing to postulate social structures or mechanisms that explain how social outcomes arise, and that in turn indicate that these structures or mechanisms are alterable given sufficient social will (and the envisioning of alternatives). On this view, the logic is specifically liberative: it can point to transformative potential in the way social existence is organized by re-examining the deep structures that generate outcomes (and life chances for people). In her book on New Racism (2010), Norma Romm offers an account of various interpretations of what is involved in retroduction as a form of inference, and of how it can be linked to a style of theorizing (and caring) in which processes of knowing (which she sees as dialogically rooted) are tied to social justice projects (http://www.springer.com/978-90-481-8727-0).

Consistency, validity, soundness, and completeness

Among the important properties that logical systems can have:

- Consistency, which means that no theorem of the system contradicts another.[11]

- Validity, which means that the system's rules of proof will never allow a false inference from true premises. A logical system has the property of soundness when the logical system has the property of validity and only uses premises that prove true (or, in the case of axioms, are true by definition).[11]

- Completeness, of a logical system, which means that if a formula is true, it can be proven (if it is true, it is a theorem of the system).

- Soundness, a term with several distinct meanings, which causes some confusion in the literature. Most commonly, soundness is a property of logical systems: if a formula can be proven in the system, then it is true in the relevant model or structure (if A is a theorem, it is true). This is the converse of completeness. A distinct, peripheral use applies soundness to arguments: a sound argument is a valid argument whose premises are true in the actual world.
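The system-level senses of soundness and completeness are often summarized symbolically. The notation below is an assumption not used elsewhere in this article: $\vdash \varphi$ is read "φ is a theorem of the system" and $\models \varphi$ is read "φ is true in every relevant structure".

```latex
\text{Soundness:}\quad \vdash \varphi \;\Longrightarrow\; \models \varphi
\qquad\qquad
\text{Completeness:}\quad \models \varphi \;\Longrightarrow\; \vdash \varphi
```

Written this way, each property is visibly the converse of the other.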

Some logical systems do not have all four properties. As an example, Kurt Gödel's incompleteness theorems show that sufficiently complex formal systems of arithmetic cannot be consistent and complete;[9] however, first-order predicate logics not extended by specific axioms to be arithmetic formal systems with equality can be complete and consistent.[12]

Rival conceptions of logic

Logic arose (see below) from a concern with correctness of argumentation. Modern logicians usually wish to ensure that logic studies just those arguments that arise from appropriately general forms of inference. For example, Thomas Hofweber writes in the Stanford Encyclopedia of Philosophy that logic "does not, however, cover good reasoning as a whole. That is the job of the theory of rationality. Rather it deals with inferences whose validity can be traced back to the formal features of the representations that are involved in that inference, be they linguistic, mental, or other representations".[2]

By contrast, Immanuel Kant argued that logic should be conceived as the science of judgment, an idea taken up in Gottlob Frege's logical and philosophical work, where thought (German: Gedanke) is substituted for judgment (German: Urteil). On this conception, the valid inferences of logic follow from the structural features of judgments or thoughts.

History

The earliest sustained work on the subject of logic is that of Aristotle.[13] Aristotelian logic became widely accepted in science and mathematics and remained in wide use in the West until the early 19th century.[14] Aristotle's system of logic was responsible for the introduction of hypothetical syllogism,[15] temporal modal logic,[16][17] and inductive logic.[18] In Europe during the later medieval period, major efforts were made to show that Aristotle's ideas were compatible with Christian faith. During the later period of the Middle Ages, logic became a main focus of philosophers, who would engage in critical logical analyses of philosophical arguments.

The Chinese logical philosopher Gongsun Long (ca. 325–250 BC) proposed the paradox "One and one cannot become two, since neither becomes two."[19] In China, the tradition of scholarly investigation into logic, however, was repressed by the Qin dynasty following the legalist philosophy of Han Feizi.

Logic in Islamic philosophy, particularly Avicenna's logic, was heavily influenced by Aristotelian logic.[20]

In India, innovations in the scholastic school, called Nyaya, continued from ancient times into the early 18th century with the Navya-Nyaya school. By the 16th century, it developed theories resembling modern logic, such as Gottlob Frege's "distinction between sense and reference of proper names" and his "definition of number," as well as the theory of "restrictive conditions for universals" anticipating some of the developments in modern set theory.[21] Since 1824, Indian logic has attracted the attention of many Western scholars, and has had an influence on important 19th-century logicians such as Charles Babbage, Augustus De Morgan, and George Boole.[22] In the 20th century, Western philosophers like Stanislaw Schayer and Klaus Glashoff have explored Indian logic more extensively.

The syllogistic logic developed by Aristotle predominated in the West until the mid-19th century, when interest in the foundations of mathematics stimulated the development of symbolic logic (now called mathematical logic). In 1854, George Boole published An Investigation of the Laws of Thought on Which are Founded the Mathematical Theories of Logic and Probabilities, introducing symbolic logic and the principles of what is now known as Boolean logic. In 1879, Gottlob Frege published Begriffsschrift which inaugurated modern logic with the invention of quantifier notation. From 1910 to 1913, Alfred North Whitehead and Bertrand Russell published Principia Mathematica[8] on the foundations of mathematics, attempting to derive mathematical truths from axioms and inference rules in symbolic logic. In 1931, Gödel raised serious problems with the foundationalist program and logic ceased to focus on such issues.

The development of logic since Frege, Russell and Wittgenstein had a profound influence on the practice of philosophy and the perceived nature of philosophical problems (see Analytic philosophy and Philosophy of mathematics). Logic, especially sentential logic, is implemented in computer logic circuits and is fundamental to computer science. Logic is commonly taught by university philosophy departments, often as a compulsory discipline.

Topics in logic

Syllogistic logic

The Organon was Aristotle's body of work on logic, with the Prior Analytics constituting the first explicit work in formal logic, introducing the syllogistic.[23] The parts of syllogistic logic, also known by the name term logic, are the analysis of judgements into propositions consisting of two terms that are related by one of a fixed number of relations, and the expression of inferences by means of syllogisms that consist of two propositions sharing a common term as premises, and a conclusion which is a proposition involving the two unrelated terms from the premises.
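The structure of a syllogism, two premises sharing a middle term and a conclusion relating the other two terms, can be illustrated with a small search for counterexamples. The sketch below reads "All A are B" as set inclusion, which is a modern assumption (traditional logic also took the subject term to be non-empty), and it checks only a three-element universe, so it illustrates the forms rather than proving anything about them. All function names are hypothetical.

```python
from itertools import combinations, product

def subsets(universe):
    """Return every subset of a small finite universe as a frozenset."""
    items = sorted(universe)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

def all_are(a, b):
    """Read the judgement 'All A are B' as set inclusion (an assumption of this sketch)."""
    return a <= b

def form_has_no_counterexample(premise_1, premise_2, conclusion, universe):
    """Try every assignment of subsets to the terms S, M, P and look for a counterexample."""
    subs = subsets(universe)
    for s, m, p in product(subs, repeat=3):
        if premise_1(s, m, p) and premise_2(s, m, p) and not conclusion(s, m, p):
            return False
    return True

universe = {1, 2, 3}

# Barbara: all M are P, all S are M, therefore all S are P.
barbara = form_has_no_counterexample(
    lambda s, m, p: all_are(m, p),
    lambda s, m, p: all_are(s, m),
    lambda s, m, p: all_are(s, p),
    universe,
)

# Undistributed middle: all P are M, all S are M, therefore all S are P.
undistributed_middle = form_has_no_counterexample(
    lambda s, m, p: all_are(p, m),
    lambda s, m, p: all_are(s, m),
    lambda s, m, p: all_are(s, p),
    universe,
)

print(barbara, undistributed_middle)  # True False
```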

Aristotle's work was regarded in classical times and from medieval times in Europe and the Middle East as the very picture of a fully worked out system. However, it was not alone: the Stoics proposed a system of propositional logic that was studied by medieval logicians. Also, the problem of multiple generality was recognised in medieval times. Nonetheless, problems with syllogistic logic were not seen as being in need of revolutionary solutions.

Today, some academics claim that Aristotle's system is generally seen as having little more than historical value (though there is some current interest in extending term logics), regarded as made obsolete by the advent of propositional logic and the predicate calculus. Others use Aristotle in argumentation theory to help develop and critically question argumentation schemes that are used in artificial intelligence and legal arguments.

Propositional logic (sentential logic)

A propositional calculus or logic (also a sentential calculus) is a formal system in which formulae representing propositions can be formed by combining atomic propositions using logical connectives, and in which a system of formal proof rules allows certain formulae to be established as "theorems".
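The way formulae are built from atomic propositions and connectives can be sketched with a small recursive data representation. The encoding below (strings for atoms, tuples for compound formulae) is an assumption chosen for brevity, and the check it performs is a semantic truth-table test rather than a derivation using formal proof rules.

```python
from itertools import product

def atoms(formula):
    """Collect the names of the atomic propositions occurring in a formula."""
    if isinstance(formula, str):
        return {formula}
    return set().union(*(atoms(sub) for sub in formula[1:]))

def evaluate(formula, env):
    """Evaluate a formula under a truth assignment env (a dict from atom name to bool)."""
    if isinstance(formula, str):
        return env[formula]
    op = formula[0]
    if op == "not":
        return not evaluate(formula[1], env)
    if op == "and":
        return evaluate(formula[1], env) and evaluate(formula[2], env)
    if op == "or":
        return evaluate(formula[1], env) or evaluate(formula[2], env)
    if op == "implies":
        return (not evaluate(formula[1], env)) or evaluate(formula[2], env)
    raise ValueError(f"unknown connective: {op}")

def is_tautology(formula):
    """True if the formula is true under every assignment to its atoms."""
    names = sorted(atoms(formula))
    return all(
        evaluate(formula, dict(zip(names, values)))
        for values in product([True, False], repeat=len(names))
    )

excluded_middle = ("or", "p", ("not", "p"))
peirce_law = ("implies", ("implies", ("implies", "p", "q"), "p"), "p")
contingent = ("implies", "p", "q")

print(is_tautology(excluded_middle), is_tautology(peirce_law), is_tautology(contingent))
# True True False
```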

Predicate logic

Predicate logic is the generic term for symbolic formal systems such as first-order logic, second-order logic, many-sorted logic, and infinitary logic.

Predicate logic provides an account of quantifiers general enough to express a wide set of arguments occurring in natural language. Aristotelian syllogistic logic specifies a small number of forms that the relevant part of the involved judgements may take. Predicate logic allows sentences to be analysed into subject and argument in several additional ways, thus allowing predicate logic to solve the problem of multiple generality that had perplexed medieval logicians.
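The significance of nested quantifiers can be seen on a small finite domain, where "for all" and "there exists" reduce to Python's `all` and `any`. The domain and the relation `admires` below are hypothetical and exist only to show that swapping the order of two quantifiers changes the truth value of a sentence.

```python
people = ["ann", "bob", "cy"]

# Hypothetical relation: the pair (x, y) means "x admires y".
admires = {("ann", "bob"), ("bob", "cy"), ("cy", "ann")}

# For every x there is some y such that x admires y.
everyone_admires_someone = all(
    any((x, y) in admires for y in people) for x in people
)

# There is some y such that every x admires y.
someone_admired_by_all = any(
    all((x, y) in admires for x in people) for y in people
)

print(everyone_admires_someone, someone_admired_by_all)  # True False
```

Both sentences use one universal and one existential quantifier over the same relation; only the nesting differs, which is what a scheme with a single sign of quantity cannot express.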

The development of predicate logic is usually attributed to Gottlob Frege, who is also credited as one of the founders of analytical philosophy, but the formulation of predicate logic most often used today is the first-order logic presented in Principles of Mathematical Logic by David Hilbert and Wilhelm Ackermann in 1928. The analytical generality of predicate logic allowed the formalisation of mathematics, drove the investigation of set theory, and allowed the development of Alfred Tarski's approach to model theory. It provides the foundation of modern mathematical logic.

Frege's original system of predicate logic was second-order, rather than first-order. Second-order logic is most prominently defended (against the criticism of Willard Van Orman Quine and others) by George Boolos and Stewart Shapiro.

Modal logic

In languages, modality deals with the phenomenon that sub-parts of a sentence may have their semantics modified by special verbs or modal particles. For example, "We go to the games" can be modified to give "We should go to the games", "We can go to the games" and perhaps "We will go to the games". More abstractly, we might say that modality affects the circumstances in which we take an assertion to be satisfied.

The logical study of modality dates back to Aristotle,[24] who was concerned with the alethic modalities of necessity and possibility, which he observed to be dual in the sense of De Morgan duality. While the study of necessity and possibility remained important to philosophers, little logical innovation happened until the landmark investigations of Clarence Irving Lewis in 1918, who formulated a family of rival axiomatizations of the alethic modalities. His work unleashed a torrent of new work on the topic, expanding the kinds of modality treated to include deontic logic and epistemic logic. The seminal work of Arthur Prior applied the same formal language to treat temporal logic and paved the way for the marriage of the two subjects. Saul Kripke discovered (contemporaneously with rivals) his theory of frame semantics which revolutionised the formal technology available to modal logicians and gave a new graph-theoretic way of looking at modality that has driven many applications in computational linguistics and computer science, such as dynamic logic.
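The graph-theoretic picture behind frame semantics can be sketched as a set of worlds, an accessibility relation between them, and a valuation saying where each atom is true. The tiny model below is hypothetical, and the operator names `box` (necessity) and `dia` (possibility) are assumptions of this sketch; it also checks the duality between necessity and possibility at every world of this one model.

```python
# Hypothetical Kripke model: worlds, accessibility relation, valuation.
worlds = {"w1", "w2", "w3"}
access = {"w1": {"w2", "w3"}, "w2": {"w2"}, "w3": set()}
valuation = {"p": {"w2"}}  # the atom p is true only at w2

def holds(formula, world):
    """Evaluate a modal formula at a world of the model above."""
    if isinstance(formula, str):
        return world in valuation.get(formula, set())
    op = formula[0]
    if op == "not":
        return not holds(formula[1], world)
    if op == "box":  # true at all accessible worlds
        return all(holds(formula[1], v) for v in access[world])
    if op == "dia":  # true at some accessible world
        return any(holds(formula[1], v) for v in access[world])
    raise ValueError(f"unknown operator: {op}")

necessarily_p = ("box", "p")
not_possibly_not_p = ("not", ("dia", ("not", "p")))

# Necessity and possibility are dual: box p agrees with not-dia-not p at each world.
duality_everywhere = all(
    holds(necessarily_p, w) == holds(not_possibly_not_p, w) for w in worlds
)

print(holds(necessarily_p, "w1"), holds(("dia", "p"), "w1"), duality_everywhere)
# False True True
```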

Informal reasoning

The motivation for the study of logic in ancient times was clear: it is so that one may learn to distinguish good from bad arguments, and so become more effective in argument and oratory, and perhaps also to become a better person. Half of the works of Aristotle's Organon treat inference as it occurs in an informal setting, side by side with the development of the syllogistic, and in the Aristotelian school, these informal works on logic were seen as complementary to Aristotle's treatment of rhetoric.

This ancient motivation is still alive, although it no longer takes centre stage in the picture of logic; typically dialectical logic will form the heart of a course in critical thinking, a compulsory course at many universities.

Argumentation theory is the study and research of informal logic, fallacies, and critical questions as they relate to everyday and practical situations. Specific types of dialogue can be analyzed and questioned to reveal premises, conclusions, and fallacies. Argumentation theory is now applied in artificial intelligence and law.

Mathematical logic

Mathematical logic really refers to two distinct areas of research: the first is the application of the techniques of formal logic to mathematics and mathematical reasoning, and the second, in the other direction, the application of mathematical techniques to the representation and analysis of formal logic.[25]

The earliest use of mathematics and geometry in relation to logic and philosophy goes back to the ancient Greeks such as Euclid, Plato, and Aristotle.[26] Many other ancient and medieval philosophers applied mathematical ideas and methods to their philosophical claims.[27]

One of the boldest attempts to apply logic to mathematics was undoubtedly the logicism pioneered by philosopher-logicians such as Gottlob Frege and Bertrand Russell: the idea was that mathematical theories were logical tautologies, and the programme was to show this by means of a reduction of mathematics to logic.[8] The various attempts to carry this out met with a series of failures, from the crippling of Frege's project in his Grundgesetze by Russell's paradox, to the defeat of Hilbert's program by Gödel's incompleteness theorems.

Both the statement of Hilbert's program and its refutation by Gödel depended upon their work establishing the second area of mathematical logic, the application of mathematics to logic in the form of proof theory.[28] Despite the negative nature of the incompleteness theorems, Gödel's completeness theorem, a result in model theory and another application of mathematics to logic, can be understood as showing how close logicism came to being true: every rigorously defined mathematical theory can be exactly captured by a first-order logical theory; Frege's proof calculus is enough to describe the whole of mathematics, though not equivalent to it. Thus we see how complementary the two areas of mathematical logic have been.

If proof theory and model theory have been the foundation of mathematical logic, they have been but two of the four pillars of the subject. Set theory originated in the study of the infinite by Georg Cantor, and it has been the source of many of the most challenging and important issues in mathematical logic, from Cantor's theorem, through the status of the Axiom of Choice and the question of the independence of the continuum hypothesis, to the modern debate on large cardinal axioms.

Recursion theory captures the idea of computation in logical and arithmetic terms; its most classical achievements are the undecidability of the Entscheidungsproblem by Alan Turing, and his presentation of the Church-Turing thesis.[29] Today recursion theory is mostly concerned with the more refined problem of complexity classes — when is a problem efficiently solvable? — and the classification of degrees of unsolvability.[30]

Philosophical logic

Philosophical logic deals with formal descriptions of natural language. Most philosophers assume that the bulk of "normal" proper reasoning can be captured by logic, if one can find the right method for translating ordinary language into that logic. Philosophical logic is essentially a continuation of the traditional discipline that was called "Logic" before the invention of mathematical logic. Philosophical logic has a much greater concern with the connection between natural language and logic. As a result, philosophical logicians have contributed a great deal to the development of non-standard logics (e.g., free logics, tense logics) as well as various extensions of classical logic (e.g., modal logics), and non-standard semantics for such logics (e.g., Kripke's technique of supervaluations in the semantics of logic).

Logic and the philosophy of language are closely related. Philosophy of language has to do with the study of how our language engages and interacts with our thinking. Logic has an immediate impact on other areas of study. Studying logic and the relationship between logic and ordinary speech can help a person better structure his own arguments and critique the arguments of others. Many popular arguments are filled with errors because so many people are untrained in logic and unaware of how to formulate an argument correctly.

Logic and computation

Logic cut to the heart of computer science as it emerged as a discipline: Alan Turing's work on the Entscheidungsproblem followed from Kurt Gödel's work on the incompleteness theorems, and the notion of general purpose computers that came from this work was of fundamental importance to the designers of the computer machinery in the 1940s.

In the 1950s and 1960s, researchers predicted that when human knowledge could be expressed using logic with mathematical notation, it would be possible to create a machine that reasons, or artificial intelligence. This turned out to be more difficult than expected because of the complexity of human reasoning. In logic programming, a program consists of a set of axioms and rules. Logic programming systems such as Prolog compute the consequences of the axioms and rules in order to answer a query.
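The idea of computing the consequences of axioms and rules can be sketched with a naive forward-chaining loop over variable-free Horn clauses. This is an illustration only: Prolog itself answers queries by a different, goal-directed strategy and supports variables, and the function name `forward_chain` and the facts and rules below are hypothetical.

```python
def forward_chain(facts, rules):
    """Repeatedly apply rules (body, head) until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            if head not in known and all(atom in known for atom in body):
                known.add(head)
                changed = True
    return known

# Hypothetical axioms (facts) and rules, written as ground atoms.
facts = {"human(socrates)"}
rules = [
    (("human(socrates)",), "mortal(socrates)"),
    (("mortal(socrates)", "greek(socrates)"), "philosopher(socrates)"),
]

derived = forward_chain(facts, rules)

def query(atom):
    """Answer a query by checking whether the atom is among the derived consequences."""
    return atom in derived

print(query("mortal(socrates)"), query("philosopher(socrates)"))  # True False
```

The second query fails because one atom in the rule's body, `greek(socrates)`, is never established.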

Today, logic is extensively applied in the fields of artificial intelligence and computer science, and these fields provide a rich source of problems in formal and informal logic. Argumentation theory is one good example of how logic is being applied to artificial intelligence. The ACM Computing Classification System in particular regards:

- Section F.3 on Logics and meanings of programs and F.4 on Mathematical logic and formal languages as part of the theory of computer science: this work covers formal semantics of programming languages, as well as work of formal methods such as Hoare logic;

- Boolean logic as fundamental to computer hardware: particularly, the system's section B.2 on Arithmetic and logic structures, relating to the operators AND, NOT, and OR;

- Many fundamental logical formalisms are essential to section I.2 on artificial intelligence, for example modal logic and default logic in Knowledge representation formalisms and methods, Horn clauses in logic programming, and description logic.
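As an illustration of Boolean logic in arithmetic and logic structures, the three operators AND, NOT and OR suffice to build a half adder, the circuit that adds two one-bit numbers. The sketch below models gates as Python functions; the function names are assumptions of this example, not part of any hardware standard.

```python
def AND(a, b):
    return a and b

def OR(a, b):
    return a or b

def NOT(a):
    return not a

def XOR(a, b):
    """Exclusive or, built only from AND, OR and NOT."""
    return AND(OR(a, b), NOT(AND(a, b)))

def half_adder(a, b):
    """Add two one-bit inputs and return the pair (sum bit, carry bit)."""
    return XOR(a, b), AND(a, b)

for a in (False, True):
    for b in (False, True):
        print(a, b, half_adder(a, b))
```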

Furthermore, computers can be used as tools for logicians. For example, in symbolic logic and mathematical logic, proofs by humans can be computer-assisted. Using automated theorem proving, machines can find and check proofs, as well as work with proofs too lengthy to be written out by hand.

Controversies

Just as there is disagreement over what logic is about, so there is disagreement about what logical truths there are.

Bivalence and the law of the excluded middle

The logics discussed above are all "bivalent" or "two-valued"; that is, they are most naturally understood as dividing propositions into true and false propositions. Non-classical logics are those systems which reject bivalence.

Hegel developed his own dialectic logic that extended Kant's transcendental logic but also brought it back to ground by assuring us that "neither in heaven nor in earth, neither in the world of mind nor of nature, is there anywhere such an abstract 'either–or' as the understanding maintains. Whatever exists is concrete, with difference and opposition in itself".[31]

In 1910 Nicolai A. Vasiliev rejected the law of excluded middle and the law of contradiction and proposed the law of excluded fourth and logic tolerant to contradiction. In the early 20th century Jan Łukasiewicz investigated the extension of the traditional true/false values to include a third value, "possible", so inventing ternary logic, the first multi-valued logic.
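A three-valued logic of this kind can be sketched numerically by encoding the values false, possible and true as 0, 1/2 and 1. The connective definitions below follow the usual presentation of Łukasiewicz's system (negation as 1 − x, disjunction as maximum, implication as min(1, 1 − x + y)); the encoding and the function names are assumptions of this sketch.

```python
from fractions import Fraction

FALSE, POSSIBLE, TRUE = Fraction(0), Fraction(1, 2), Fraction(1)
VALUES = [FALSE, POSSIBLE, TRUE]

def neg(x):
    return 1 - x

def disj(x, y):
    return max(x, y)

def conj(x, y):
    return min(x, y)

def implies(x, y):
    return min(TRUE, 1 - x + y)

# The law of the excluded middle, "p or not p", evaluated at each value of p.
excluded_middle = [disj(p, neg(p)) for p in VALUES]

# The conditional "if p then p" still takes the value true everywhere.
identity = [implies(p, p) for p in VALUES]

print(excluded_middle)  # [Fraction(1, 1), Fraction(1, 2), Fraction(1, 1)]
print(identity)         # [Fraction(1, 1), Fraction(1, 1), Fraction(1, 1)]
```

When p takes the third value, "p or not p" also takes the third value, so the excluded middle is no longer a law of the system.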

Logics such as fuzzy logic have since been devised with an infinite number of "degrees of truth", represented by a real number between 0 and 1.[32]

Intuitionistic logic was proposed by L.E.J. Brouwer as the correct logic for reasoning about mathematics, based upon his rejection of the law of the excluded middle as part of his intuitionism. Brouwer rejected formalisation in mathematics, but his student Arend Heyting studied intuitionistic logic formally, as did Gerhard Gentzen. Intuitionistic logic has come to be of great interest to computer scientists, as it is a constructive logic, and is hence a logic of what computers can do.

Modal logic is not truth conditional, and so it has often been proposed as a non-classical logic. However, modal logic is normally formalised with the principle of the excluded middle, and its relational semantics is bivalent, so this inclusion is disputable.

"Is logic empirical?"

What is the epistemological status of the laws of logic? What sort of argument is appropriate for criticizing purported principles of logic? In an influential paper entitled "Is logic empirical?"[33] Hilary Putnam, building on a suggestion of W.V. Quine, argued that in general the facts of propositional logic have a similar epistemological status to facts about the physical universe, such as the laws of mechanics or of general relativity, and in particular that what physicists have learned about quantum mechanics provides a compelling case for abandoning certain familiar principles of classical logic: if we want to be realists about the physical phenomena described by quantum theory, then we should abandon the principle of distributivity, substituting for classical logic the quantum logic proposed by Garrett Birkhoff and John von Neumann.[34]

Another paper by the same name by Sir Michael Dummett argues that Putnam's desire for realism mandates the law of distributivity.[35] Distributivity of logic is essential for the realist's understanding of how propositions are true of the world in just the same way as he has argued the principle of bivalence is. In this way, the question, "Is logic empirical?" can be seen to lead naturally into the fundamental controversy in metaphysics on realism versus anti-realism.

Implication: strict or material?

The notion of implication formalised in classical logic does not translate comfortably into natural language by means of "if… then…", owing to a number of problems called the paradoxes of material implication.

The first class of paradoxes involves counterfactuals, such as "If the moon is made of green cheese, then 2+2=5", which are puzzling because natural language does not support the principle of explosion. Eliminating this class of paradoxes was the reason for C. I. Lewis's formulation of strict implication, which eventually led to more radically revisionist logics such as relevance logic.

The second class of paradoxes involves redundant premises, falsely suggesting that we know the succedent because of the antecedent: thus "if that man gets elected, granny will die" is materially true since granny is mortal, regardless of the man's election prospects. Such sentences violate the Gricean maxim of relevance, and can be modelled by logics that reject the principle of monotonicity of entailment, such as relevance logic.
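Both classes of paradox follow directly from the truth table of material implication, which counts "if p then q" as false only when p is true and q is false. The following minimal sketch makes this concrete; the function and variable names are illustrative only.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def truth_table():
    """All four rows (p, q, p -> q) of the classical truth table."""
    return [(p, q, implies(p, q)) for p, q in product([True, False], repeat=2)]


# First class: a false antecedent makes the conditional true,
# whatever the consequent says.
moon_is_green_cheese = False
two_plus_two_is_five = (2 + 2 == 5)
first_paradox = implies(moon_is_green_cheese, two_plus_two_is_five)

# Second class: a true consequent makes the conditional true,
# whether or not the antecedent holds.
granny_is_mortal = True
second_paradox = all(
    implies(man_elected, granny_is_mortal) for man_elected in (True, False)
)

if __name__ == "__main__":
    for p, q, result in truth_table():
        print(f"p={p!s:5} q={q!s:5} p->q={result}")
    print("green cheese conditional:", first_paradox)
    print("granny conditional (either election outcome):", second_paradox)
```

In neither case does the antecedent play any role in making the conditional true, which is the feature that strict implication and relevance logic set out to repair.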

Tolerating the impossible

Hegel was deeply critical of any simplified notion of the Law of Non-Contradiction. His critique was based on Leibniz's idea that this law of logic also requires a sufficient ground to specify from what point of view (or time) one says that something cannot contradict itself. A building, for example, both moves and does not move; the ground for the first is our solar system, and for the second the earth. In Hegelian dialectic, the law of non-contradiction, of identity, itself relies upon difference and so is not independently assertable.

Closely related to questions arising from the paradoxes of implication is the suggestion that logic ought to tolerate inconsistency. Relevance logic and paraconsistent logic are the most important approaches here, though the concerns are different: a key consequence of classical logic and some of its rivals, such as intuitionistic logic, is that they respect the principle of explosion, which means that the logic collapses if it is capable of deriving a contradiction. Graham Priest, the main proponent of dialetheism, has argued for paraconsistency on the grounds that there are, in fact, true contradictions.[36]
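The principle of explosion mentioned above can be made explicit with a short derivation. The steps below are a standard textbook-style sketch rather than something taken from the sources cited here; $P$ and $Q$ stand for arbitrary propositions.

```latex
\begin{align*}
1.\quad & P \land \lnot P && \text{assumption (a contradiction)} \\
2.\quad & P               && \text{from 1, conjunction elimination} \\
3.\quad & \lnot P         && \text{from 1, conjunction elimination} \\
4.\quad & P \lor Q        && \text{from 2, disjunction introduction} \\
5.\quad & Q               && \text{from 3 and 4, disjunctive syllogism}
\end{align*}
```

Since $Q$ was arbitrary, a single contradiction yields every proposition. A logic that tolerates inconsistency has to block at least one of these steps, and disjunctive syllogism (step 5) is a common choice.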

Rejection of logical truth

The philosophical vein of various kinds of skepticism contains many kinds of doubt and rejection of the various bases upon which logic rests, such as the idea of logical form, correct inference, or meaning, typically leading to the conclusion that there are no logical truths. Observe that this is opposite to the usual views in philosophical skepticism, where logic directs skeptical enquiry to doubt received wisdoms, as in the work of Sextus Empiricus.

Friedrich Nietzsche provides a strong example of the rejection of the usual basis of logic: his radical rejection of idealisation led him to reject truth as a "mobile army of metaphors, metonyms, and anthropomorphisms—in short ... metaphors which are worn out and without sensuous power; coins which have lost their pictures and now matter only as metal, no longer as coins".[37] His rejection of truth did not lead him to reject the idea of either inference or logic completely, but rather suggested that "logic [came] into existence in man's head [out] of illogic, whose realm originally must have been immense. Innumerable beings who made inferences in a way different from ours perished".[38] Thus there is the idea that logical inference has a use as a tool for human survival, but that its existence does not support the existence of truth, nor does it have a reality beyond the instrumental: "Logic, too, also rests on assumptions that do not correspond to anything in the real world".[39]

This position held by Nietzsche, however, has come under extreme scrutiny for several reasons. Critics contend that he fails to demonstrate the validity of his claims and merely asserts them rhetorically. Furthermore, his position has been claimed to be self-refuting by philosophers, such as Jürgen Habermas, who have accused Nietzsche of not even having a coherent perspective, let alone a theory of knowledge.[40] Georg Lukács, in his book The Destruction of Reason, asserted that "Were we to study Nietzsche’s statements in this area from a logico-philosophical angle, we would be confronted by a dizzy chaos of the most lurid assertions, arbitrary and violently incompatible".[41] Extreme skepticism such as that displayed by Nietzsche has not been met with much seriousness by analytic philosophers in the 20th century. Bertrand Russell famously referred to Nietzsche's claims as "empty words" in his book A History of Western Philosophy.[42]

Notes

- ^ "possessed of reason, intellectual, dialectical, argumentative", also related to λόγος (logos), "word, thought, idea, argument, account, reason, or principle" (Liddell & Scott 1999; Online Etymology Dictionary 2001).

- ^ a b Hofweber, T. (2004). "Logic and Ontology". In Zalta, Edward N. Stanford Encyclopedia of Philosophy. http://plato.stanford.edu/entries/logic-ontology.

- ^ Cox, J. Robert; Willard, Charles Arthur, eds (1983). Advances in Argumentation Theory and Research. Southern Illinois University Press. ISBN 978-0809310500.

- ^ For example, Nyaya (syllogistic recursion) dates back 1900 years.

- ^ Mohists and the School of Names date back 2200 years.

- ^ Plato (1976). Buchanan, Scott. ed. The Portable Plato. Penguin. ISBN 0-14-015040-4.

- ^ Aristotle (2001). "Posterior Analytics". In Mckeon, Richard. The Basic Works. Modern Library. ISBN 0-375-75799-6.

- ^ a b c Whitehead, Alfred North; Russell, Bertrand (1967). Principia Mathematica to *56. Cambridge University Press. ISBN 0-521-62606-4.

- ^ a b For a more modern treatment, see Hamilton, A. G. (1980). Logic for Mathematicians. Cambridge University Press. ISBN 0-521-29291-3.

- ^ Łukasiewicz, Jan (1957). Aristotle's syllogistic from the standpoint of modern formal logic (2nd ed.). Oxford University Press. p. 7. ISBN 978-0198241447.

- ^ a b Bergmann, Merrie, James Moor, and Jack Nelson. The Logic Book fifth edition. New York, NY: McGraw-Hill, 2009.

- ^ Mendelson, Elliott (1964). "Quantification Theory: Completeness Theorems". Introduction to Mathematical Logic. Van Nostrand. ISBN 0412808307.

- ^ E.g., Kline (1972, p.53) wrote "A major achievement of Aristotle was the founding of the science of logic".

- ^ "Aristotle", MTU Department of Chemistry.

- ^ Jonathan Lear (1986). "Aristotle and Logical Theory". Cambridge University Press. p.34. ISBN 0521311780

- ^ Simo Knuuttila (1981). "Reforging the great chain of being: studies of the history of modal theories". Springer Science & Business. p.71. ISBN 9027711259

- ^ Michael Fisher, Dov M. Gabbay, Lluís Vila (2005). "Handbook of temporal reasoning in artificial intelligence". Elsevier. p.119. ISBN 0444514937

- ^ Harold Joseph Berman (1983). "Law and revolution: the formation of the Western legal tradition". Harvard University Press. p.133. ISBN 0674517768

- ^ The four Catuṣkoṭi logical divisions are formally very close to the four opposed propositions of the Greek tetralemma, which in turn are analogous to the four truth values of modern relevance logic Cf. Belnap (1977); Jayatilleke, K. N., (1967, The logic of four alternatives, in Philosophy East and West, University of Hawaii Press).

- ^ Goodman, Lenn Evan (1992). Avicenna. Routledge. p. 184. ISBN 978-0415019293.

- ^ Kisor Kumar Chakrabarti (June 1976). "Some Comparisons Between Frege's Logic and Navya-Nyaya Logic". Philosophy and Phenomenological Research (International Phenomenological Society) 36 (4): 554–563. doi:10.2307/2106873. JSTOR 2106873. "This paper consists of three parts. The first part deals with Frege's distinction between sense and reference of proper names and a similar distinction in Navya-Nyaya logic. In the second part we have compared Frege's definition of number to the Navya-Nyaya definition of number. In the third part we have shown how the study of the so-called 'restrictive conditions for universals' in Navya-Nyaya logic anticipated some of the developments of modern set theory."

- ^ Jonardon Ganeri (2001). Indian logic: a reader. Routledge. pp. vii, 5, 7. ISBN 0700713069.

- ^ "Aristotle". Encyclopædia Britannica. http://www.britannica.com/EBchecked/topic/346217/history-of-logic/65920/Aristotle.

- ^ Peter Thomas Geach (1980). "Logic Matters". University of California Press. p.316. ISBN 0520038479

- ^ Stolyar, Abram A. (1983). Introduction to Elementary Mathematical Logic. Dover Publications. p. 3. ISBN 0-486-64561-4.

- ^ Barnes, Jonathan (1995). The Cambridge Companion to Aristotle. Cambridge University Press. p. 27. ISBN 0-521-42294-9.

- ^ Aristotle (1989). Prior Analytics. Hackett Publishing Co. p. 115. ISBN 978-0872200647.

- ^ Mendelson, Elliott (1964). "Formal Number Theory: Gödel's Incompleteness Theorem". Introduction to Mathematical Logic. Monterey, Calif.: Wadsworth & Brooks/Cole Advanced Books & Software. OCLC 13580200.

- ^ Brookshear, J. Glenn (1989). "Computability: Foundations of Recursive Function Theory". Theory of computation: formal languages, automata, and complexity. Redwood City, Calif.: Benjamin/Cummings Pub. Co. ISBN 0805301437.

- ^ Brookshear, J. Glenn (1989). "Complexity". Theory of computation: formal languages, automata, and complexity. Redwood City, Calif.: Benjamin/Cummings Pub. Co. ISBN 0805301437.

- ^ Hegel, G. W. F (1971) [1817]. Philosophy of Mind. Encyclopedia of the Philosophical Sciences. trans. William Wallace. Oxford: Clarendon Press. p. 174. ISBN 0198750145.

- ^ Hájek, Petr (2006). "Fuzzy Logic". In Zalta, Edward N. Stanford Encyclopedia of Philosophy. http://plato.stanford.edu/entries/logic-fuzzy/.

- ^ Putnam, H. (1969). "Is Logic Empirical?". Boston Studies in the Philosophy of Science 5.

- ^ Birkhoff, G.; von Neumann, J. (1936). "The Logic of Quantum Mechanics". Annals of Mathematics 37 (4): 823–843. doi:10.2307/1968621. JSTOR 1968621.

- ^ Dummett, M. (1978). "Is Logic Empirical?". Truth and Other Enigmas. ISBN 0-674-91076-1.

- ^ Priest, Graham (2008). "Dialetheism". In Zalta, Edward N. Stanford Encyclopedia of Philosophy. http://plato.stanford.edu/entries/dialetheism.

- ^ Nietzsche, 1873, On Truth and Lies in a Nonmoral Sense.

- ^ Nietzsche, 1882, The Gay Science.

- ^ Nietzsche, 1878, Human, All Too Human.

- ^ Babette Babich, Habermas, Nietzsche, and Critical Theory.

- ^ Georg Lukács, The Destruction of Reason. http://www.marxists.org/archive/lukacs/works/destruction-reason/ch03.htm

- ^ Bertrand Russell, A History of Western Philosophy. http://www.amazon.com/History-Western-Philosophy-Bertrand-Russell/dp/0671201581

References

- Nuel Belnap, (1977). "A useful four-valued logic". In Dunn & Eppstein, Modern uses of multiple-valued logic. Reidel: Boston.

- Józef Maria Bocheński (1959). A précis of mathematical logic. Translated from the French and German editions by Otto Bird. D. Reidel, Dordrecht, South Holland.

- Józef Maria Bocheński, (1970). A history of formal logic. 2nd Edition. Translated and edited from the German edition by Ivo Thomas. Chelsea Publishing, New York.

- Brookshear, J. Glenn (1989). Theory of computation: formal languages, automata, and complexity. Redwood City, Calif.: Benjamin/Cummings Pub. Co. ISBN 0805301437.

- Cohen, R.S, and Wartofsky, M.W. (1974). Logical and Epistemological Studies in Contemporary Physics. Boston Studies in the Philosophy of Science. D. Reidel Publishing Company: Dordrecht, Netherlands. ISBN 90-277-0377-9.

- Finkelstein, D. (1969). "Matter, Space, and Logic". in R.S. Cohen and M.W. Wartofsky (eds. 1974).

- Gabbay, D.M., and Guenthner, F. (eds., 2001–2005). Handbook of Philosophical Logic. 13 vols., 2nd edition. Kluwer Publishers: Dordrecht.

- Hilbert, D., and Ackermann, W, (1928). Grundzüge der theoretischen Logik (Principles of Mathematical Logic). Springer-Verlag. OCLC 2085765

- Susan Haack, (1996). Deviant Logic, Fuzzy Logic: Beyond the Formalism, University of Chicago Press.

- Hodges, W., (2001). Logic. An introduction to Elementary Logic, Penguin Books.

- Hofweber, T., (2004), Logic and Ontology. Stanford Encyclopedia of Philosophy. Edward N. Zalta (ed.).

- Hughes, R.I.G., (1993, ed.). A Philosophical Companion to First-Order Logic. Hackett Publishing.

- Kline, Morris (1972). Mathematical Thought From Ancient to Modern Times. Oxford University Press. ISBN 0-19-506135-7.

- Kneale, William, and Kneale, Martha, (1962). The Development of Logic. Oxford University Press, London, UK.

- Liddell, Henry George; Scott, Robert. "Logikos". A Greek-English Lexicon. Perseus Project. http://www.perseus.tufts.edu/cgi-bin/ptext?doc=Perseus%3Atext%3A1999.04.0057%3Aentry%3D%2363716. Retrieved 8 May 2009.

- Mendelson, Elliott, (1964). Introduction to Mathematical Logic. Wadsworth & Brooks/Cole Advanced Books & Software: Monterey, Calif. OCLC 13580200

- Harper, Douglas (2001). "Logic". Online Etymology Dictionary. http://www.etymonline.com/index.php?term=logic. Retrieved 8 May 2009.

- Smith, B., (1989). "Logic and the Sachverhalt". The Monist 72(1):52–69.

- Whitehead, Alfred North and Bertrand Russell, (1910). Principia Mathematica. Cambridge University Press: Cambridge, England. OCLC 1041146

External links and further readings

- LogicWiki, an external wiki

- Introductions and tutorials

- An Introduction to Philosophical Logic, by Paul Newall, aimed at beginners.

- forall x: an introduction to formal logic, by P.D. Magnus, covers sentential and quantified logic.

- Logic Self-Taught: A Workbook (originally prepared for on-line logic instruction).

- Nicholas Rescher. (1964). Introduction to Logic, St. Martin's Press.

- Essays

- "Symbolic Logic" and "The Game of Logic", Lewis Carroll, 1896.

- Math & Logic: The history of formal mathematical, logical, linguistic and methodological ideas. In The Dictionary of the History of Ideas.

- Reference material

- Translation Tips, by Peter Suber, for translating from English into logical notation.

- Ontology and History of Logic. An Introduction with an annotated bibliography.

- Reading lists

- The London Philosophy Study Guide offers many suggestions on what to read, depending on the student's familiarity with the subject.
